Dream-Coder 7B

Introducing Dream-Coder 7B, the most powerful open diffusion large language model for code to date.

Team: Zhihui Xie*, Jiacheng Ye*, Lin Zheng*, Jiahui Gao*, Jingwei Dong, Zirui Wu, Xueliang Zhao, Shansan Gong, Xin Jiang, Zhenguo Li†, and Lingpeng Kong†

Affiliations: The University of Hong Kong, Huawei Noah’s Ark Lab

(*Equal contribution. †Core advising.)

Introducing Dream-Coder 7B

In a joint effort with Huawei Noah’s Ark Lab, we release Dream-Coder 7B, the first fully open-source diffusion LLM for code. Its development pipeline is fully transparent, and all components are publicly available: data processing scripts, implementation code, and model weights.

Text diffusion models represent a fundamental shift away from autoregressive LLMs. This emerging direction has attracted significant attention across academia and industry.

Features

Flexible Code Generation

We observe that Dream-Coder 7B exhibits emergent any-order generation, adaptively choosing its decoding style based on the coding task. For example, Dream-Coder 7B Instruct displays patterns such as:

- Sketch-first generation: For problems that read inputs from standard input and write outputs to standard output (Figure 1), Dream-Coder 7B Instruct begins by sketching the entire entry-point scaffold, then works backward to implement and refine helper functions and core logic.

- Left-to-right generation: For single-function completions (Figure 2), Dream-Coder 7B Instruct writes almost linearly, starting at the def header and moving left to right.

- Interleaved reasoning generation: For code reasoning tasks that require predicting output from code and input (Figure 3), Dream-Coder 7B Instruct first echoes the given input, then walks through the program step by step, jotting down each calculation and filling in output lines as soon as it figures them out.

These demos were collected using consistent sampling parameters: temperature=0.1, diffusion_steps=512, max_new_tokens=512, alg="entropy", top_p=1.0, alg_temp=0.0, and pad_penalty=3.0.
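To make these settings concrete, the sketch below gathers them into a plain dictionary and illustrates one way an entropy-based decoding order can produce the any-order patterns described above. It is a toy illustration, not the released decoding code: it assumes that alg="entropy" with alg_temp=0.0 means the decoder greedily commits the masked positions whose predicted distributions have the lowest entropy, and the helper names `entropy` and `positions_to_unmask` are hypothetical.

```python
import math

# Sampling parameters quoted in the text above.
SAMPLING_CONFIG = {
    "temperature": 0.1,
    "diffusion_steps": 512,
    "max_new_tokens": 512,
    "alg": "entropy",
    "top_p": 1.0,
    "alg_temp": 0.0,
    "pad_penalty": 3.0,
}


def entropy(probs):
    """Shannon entropy (in nats) of one categorical distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def positions_to_unmask(probs_per_position, masked_positions, k):
    """Return the k masked positions with the lowest predictive entropy.

    Assumption: the selection is greedy (no randomness), which is how we
    read alg_temp=0.0 here.
    """
    ranked = sorted(masked_positions, key=lambda i: entropy(probs_per_position[i]))
    return ranked[:k]


# Toy distributions over a 3-token vocabulary for three masked positions.
# Position 2 is the most certain, so it is committed first even though it
# is the right-most position.
toy_probs = {
    0: [0.4, 0.3, 0.3],
    1: [0.6, 0.2, 0.2],
    2: [0.98, 0.01, 0.01],
}
first = positions_to_unmask(toy_probs, masked_positions=[0, 1, 2], k=1)
```

Under this reading, the decoding order follows the model's confidence rather than token position, which is consistent with the entry-point scaffold appearing before the helper functions in the sketch-first pattern.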

Variable-Length Code Infilling

One of the biggest challenges for diffusion LLMs is that they cannot naturally generate variable-length sequences. This limitation is particularly problematic for infilling, that is, generating code that fits seamlessly between existing snippets. We introduce an infilling variant, DreamOn-7B, that adjusts the length of masked spans during generation through two special tokens, <|expand|> and <|delete|>, which dynamically expand or contract the mask region to match the required infill length (Figure 4 and Figure 5). This allows the model to handle variable-length code infilling without prior knowledge of the target sequence length.

For more details on our variable-length generation method, DreamOn, please refer to the accompanying blog post.
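The toy sketch below shows how two such tokens can grow or shrink a masked span. The exact semantics are an assumption made for illustration: a mask predicted as <|expand|> becomes two masks, a mask predicted as <|delete|> is removed, and any other prediction fills the mask. The mask token string `<|mask|>` and the function `apply_length_edits` are hypothetical names, not the model's API.

```python
MASK, EXPAND, DELETE = "<|mask|>", "<|expand|>", "<|delete|>"


def apply_length_edits(tokens, predictions):
    """Apply one round of predictions to a partially masked sequence.

    tokens: list of tokens, some of which are MASK.
    predictions: dict mapping a masked index to its predicted token.
    Assumed semantics: EXPAND turns one mask into two masks, DELETE drops
    the mask, and any other prediction fills the mask.
    """
    out = []
    for i, tok in enumerate(tokens):
        if tok != MASK or i not in predictions:
            out.append(tok)           # keep context and untouched masks
            continue
        pred = predictions[i]
        if pred == EXPAND:
            out.extend([MASK, MASK])  # grow the span by one position
        elif pred == DELETE:
            continue                  # shrink the span by one position
        else:
            out.append(pred)          # commit an ordinary token
    return out


prefix = ["def", "add", "(", "a", ",", "b", ")", ":"]
suffix = ["\n"]

# Start with only two masks although the infill needs four tokens.
seq = prefix + [MASK, MASK] + suffix
seq = apply_length_edits(seq, {8: "return", 9: EXPAND})
seq = apply_length_edits(seq, {9: "a", 10: EXPAND})
seq = apply_length_edits(seq, {10: "+", 11: "b"})

# Starting with too many masks is handled by deleting the surplus.
shrunk = apply_length_edits([MASK, MASK, MASK], {0: "x", 1: DELETE, 2: DELETE})
```

In this example the span grows from two masks to the four tokens `return a + b`, and the over-long span of three masks shrinks to a single token, without the caller ever specifying the target length.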

Adaptation

Dream-Coder 7B belongs to the family of discrete diffusion models.

Post-training

Our post-training recipe consists of supervised fine-tuning and reinforcement learning from verifiable rewards.

Supervised Fine-tuning

We use 5 million high-quality training samples from Ling-Coder-SFT, which includes a diverse range of programming tasks across multiple languages and corpora.

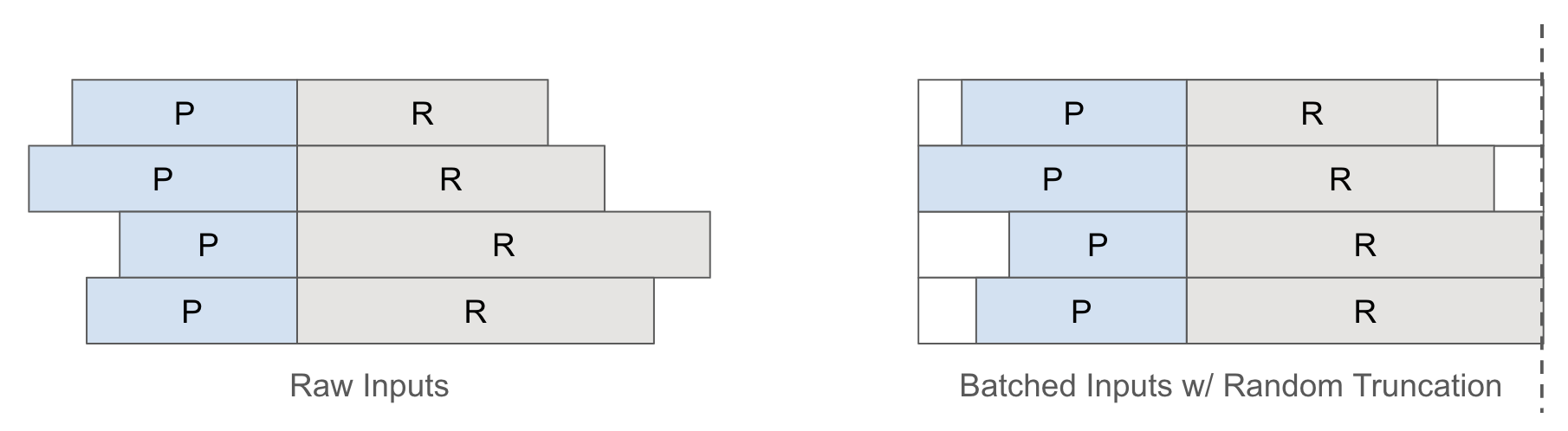

In our early experiments, losses on [PAD] tokens (used as end-of-sequence markers) led to low sample efficiency and unstable generation. Specifically, with a simple max-length padding strategy, we observed:

- Low sample efficiency: A large portion of compute is wasted on [PAD] tokens, which dominate the loss, cause overfitting, and slow the learning of meaningful tokens.

- Generation instability: Since responses are all padded with [PAD], the model tended to produce short outputs during inference.

To address these issues, we implement Random Truncation and [PAD] penalty. As illustrated below, we randomly select a sample from the batch and truncate responses based on its length during training. This improves sample efficiency and avoids over-padded outputs. During inference, we apply a penalty to the logits of the [PAD] token to prevent its premature generation. This penalty term is gradually annealed as decoding progresses. Through this mechanism, the model initially prioritizes generating meaningful tokens and considers termination in the later decoding stage. Additionally, as in adaptation training, we apply Context-adaptive Token-level Noise Rescheduling to dynamically adjust noise based on context complexity to improve training efficacy.
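The two mechanisms can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the training or inference code: the pad id of 0, the linear annealing schedule, and the function names `random_truncate` and `penalize_pad` are all hypothetical. Only the default penalty value of 3.0 comes from the sampling parameters listed earlier.

```python
import random

PAD_ID = 0  # hypothetical id for the [PAD] token


def random_truncate(responses, rng):
    """Cut every response to the length of one randomly chosen sample.

    Shorter responses are padded up to that length, so the batch carries
    far less padding than it would with max-length padding.
    """
    target_len = len(rng.choice(responses))
    batch = []
    for resp in responses:
        clipped = resp[:target_len]
        batch.append(clipped + [PAD_ID] * (target_len - len(clipped)))
    return batch, target_len


def penalize_pad(logits, pad_id, step, total_steps, pad_penalty=3.0):
    """Subtract an annealed penalty from the [PAD] logit.

    Assumption: the penalty decays linearly from pad_penalty at the first
    decoding step to zero at the last one.
    """
    remaining = 1.0 - step / max(total_steps - 1, 1)
    adjusted = list(logits)
    adjusted[pad_id] -= pad_penalty * remaining
    return adjusted


responses = [[5, 6, 7, 8, 9], [5, 6], [5, 6, 7]]
batch, target_len = random_truncate(responses, random.Random(0))

early = penalize_pad([2.0, 1.0, 0.5], pad_id=PAD_ID, step=0, total_steps=5)
late = penalize_pad([2.0, 1.0, 0.5], pad_id=PAD_ID, step=4, total_steps=5)
```

Here `early` pushes the [PAD] logit from 2.0 down to -1.0, so a content token wins at the start of decoding, while `late` leaves the logits unchanged, so the model is free to terminate.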

Reinforcement Learning with Verifiable Rewards

Inspired by the success of RL with verifiable rewards

We use the GRPO algorithm with the following design choices:

- No entropy & KL loss: We eliminate the entropy loss, as it often results in training instability. We likewise drop the KL loss, which restricts exploration and requires additional computation for the reference policy.

- Clip-higher: We use an asymmetric clipping range for importance sampling ratios in policy updates, raising the upper bound to encourage exploration of low-probability tokens.

- Coupled sampling: For each batch, we sample complementary masks to estimate token-level log-likelihoods, which increases sample efficiency and reduces variance.

- Intra-batch informative substitution: For each batch, we randomly duplicate samples with nonzero advantages to replace those yielding zero advantage, ensuring every batch provides informative learning signals.
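The design choices above can be sketched with toy scalar versions of each component. This is an illustration under stated assumptions rather than the training code: the clipping bounds 0.2 and 0.28 are placeholder values not given in the text, the standardized group advantage is the usual GRPO form, complementary masks are assumed to cover each response token exactly once across the pair, and all function names are hypothetical.

```python
import random


def group_advantages(rewards):
    """GRPO-style advantages: rewards standardized within one prompt's group."""
    mean = sum(rewards) / len(rewards)
    var = sum((r - mean) ** 2 for r in rewards) / len(rewards)
    return [(r - mean) / (var ** 0.5 + 1e-6) for r in rewards]


def clip_higher_objective(ratio, advantage, eps_low=0.2, eps_high=0.28):
    """Clipped surrogate with an asymmetric range [1 - eps_low, 1 + eps_high].

    A larger upper bound lets low-probability tokens gain more probability
    mass per update. The two epsilon values are illustrative assumptions.
    """
    clipped = min(max(ratio, 1.0 - eps_low), 1.0 + eps_high)
    return min(ratio * advantage, clipped * advantage)


def complementary_masks(length, rng):
    """Two masks such that each token is masked in exactly one of them."""
    mask = [rng.random() < 0.5 for _ in range(length)]
    return mask, [not m for m in mask]


def substitute_uninformative(samples, advantages, rng):
    """Replace zero-advantage samples with random copies of informative ones."""
    informative = [i for i, a in enumerate(advantages) if a != 0.0]
    if not informative:
        return list(samples), list(advantages)
    new_samples, new_advs = [], []
    for s, a in zip(samples, advantages):
        if a == 0.0:
            j = rng.choice(informative)
            s, a = samples[j], advantages[j]
        new_samples.append(s)
        new_advs.append(a)
    return new_samples, new_advs


rng = random.Random(0)
flat = group_advantages([1.0, 1.0, 1.0, 1.0])  # identical rewards carry no signal
mask_a, mask_b = complementary_masks(10, rng)
samples, advs = substitute_uninformative(["a", "b", "c"], [0.0, 1.0, -1.0], rng)
```

A group in which every rollout earns the same reward yields all-zero advantages, as `flat` shows, which is the case that the substitution step is meant to handle.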

To speed up training, we adopt Fast-dLLM.

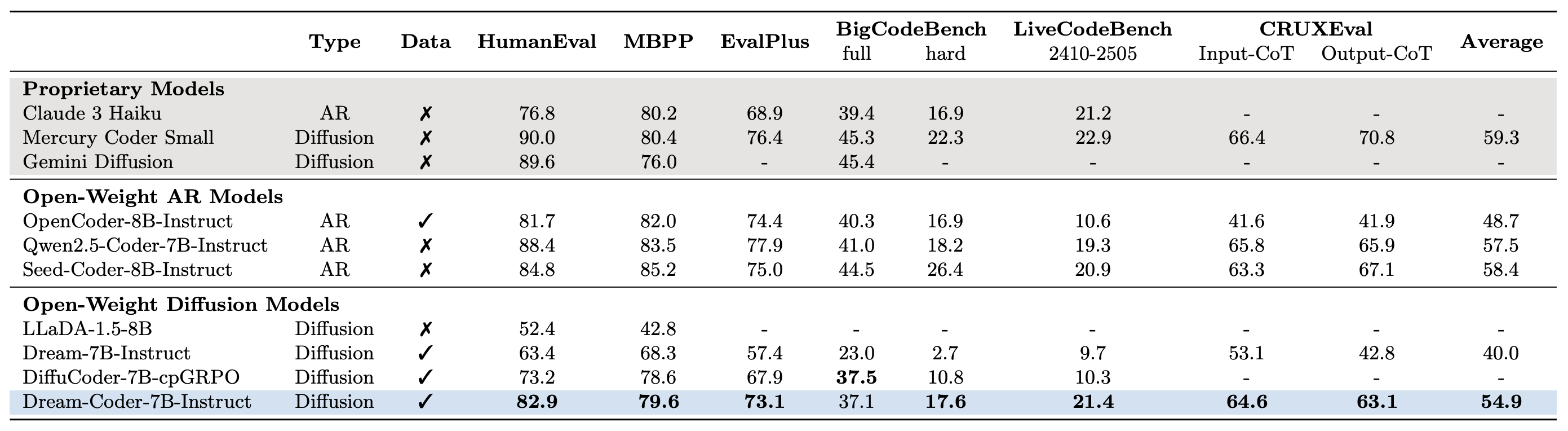

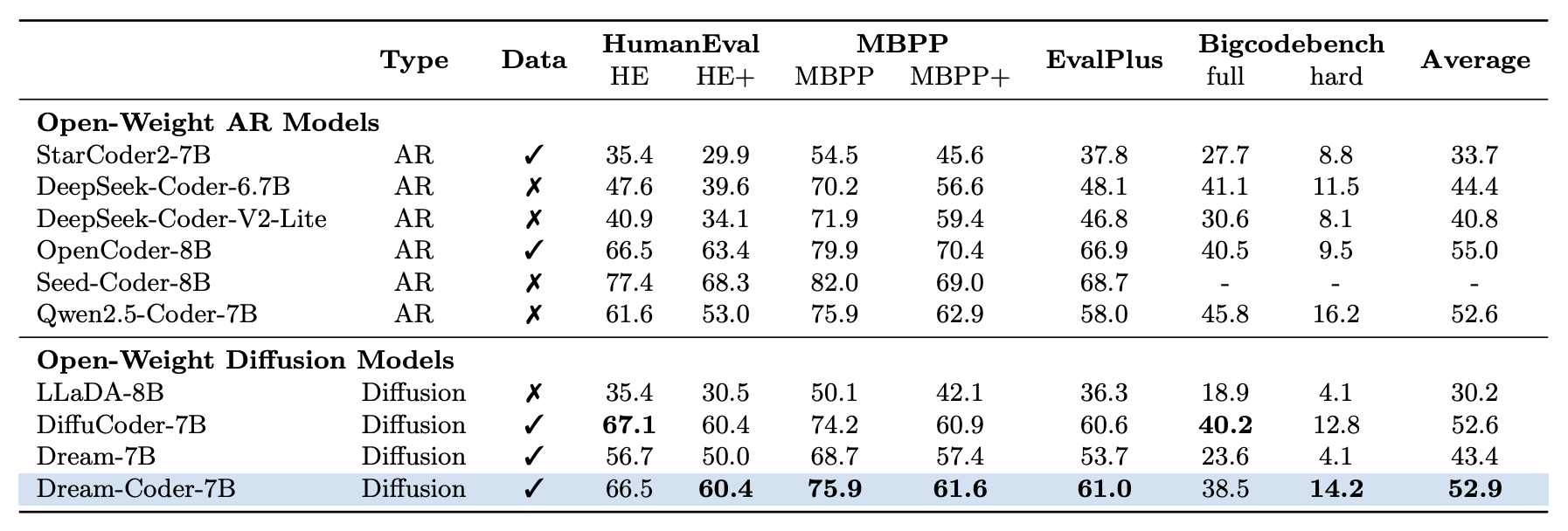

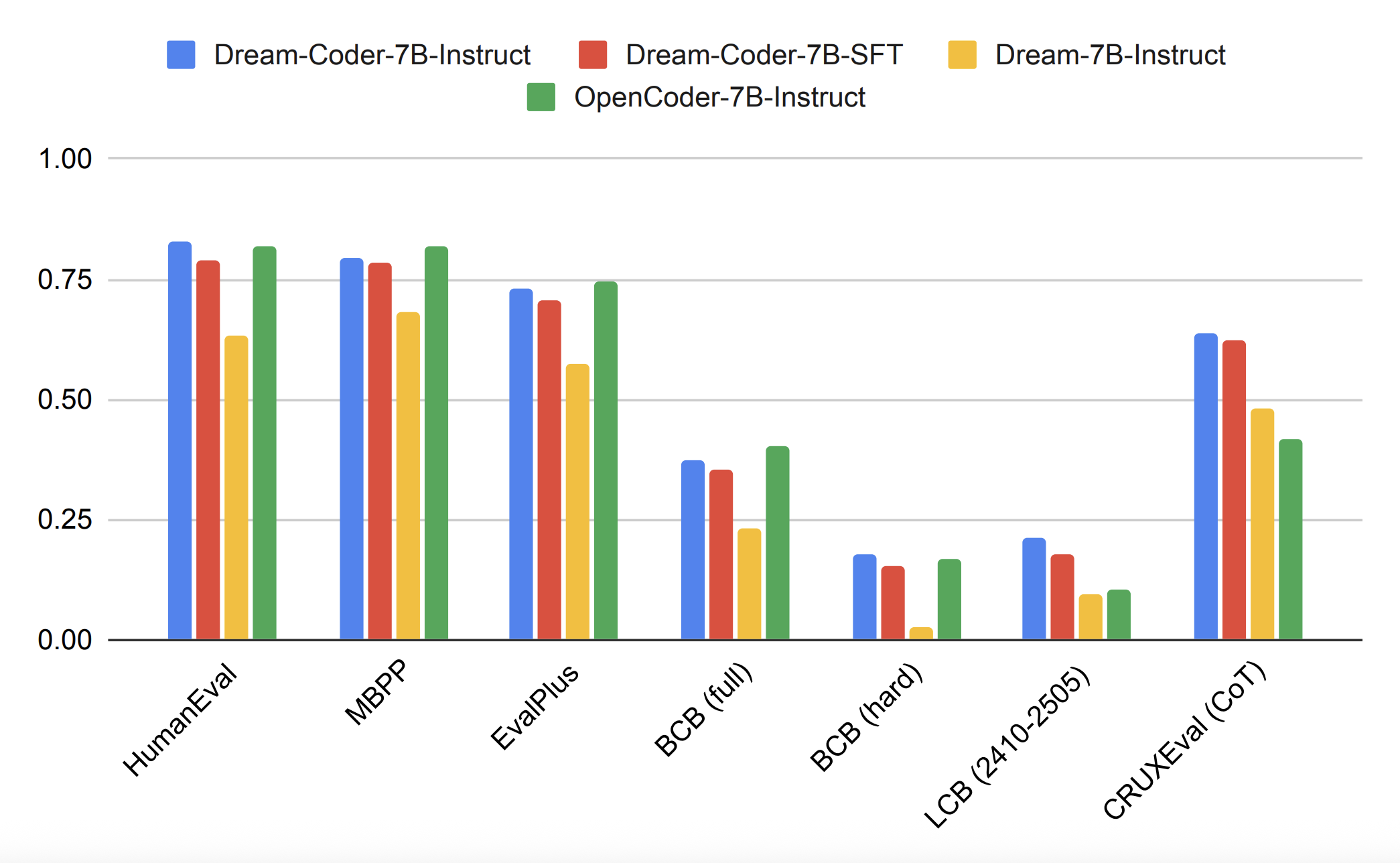

Our final model, Dream-Coder 7B Instruct, delivers strong performance across standard benchmarks, including HumanEval, MBPP, BigCodeBench, LiveCodeBench, and CRUXEval. Notably, trained solely on publicly available data, Dream-Coder 7B Instruct outperforms OpenCoder 8B Instruct.

Conclusion

Dream-Coder 7B represents a continuation of our efforts to enhance open-source diffusion LLMs, with particular focus on post-training improvements. Trained entirely on open-source data, it delivers competitive performance in code generation. Future efforts will explore context extension and improved data curation to further boost Dream models’ capabilities.

Citation

@misc{dreamcoder2025,
    title = {Dream-Coder 7B},
    url = {https://hkunlp.github.io/blog/2025/dream-coder},
    author = {Xie, Zhihui and Ye, Jiacheng and Zheng, Lin and Gao, Jiahui and Dong, Jingwei and Wu, Zirui and Zhao, Xueliang and Gong, Shansan and Jiang, Xin and Li, Zhenguo and Kong, Lingpeng},
    year = {2025}
}