Dream-VL & Dream-VLA

Introducing Dream-VL and Dream-VLA, our latest open diffusion Vision-Language and Vision-Language-Action models.

Team: Jiacheng Ye*, Shansan Gong*, Jiahui Gao†, Junming Fan, Shuang Wu, Wei Bi, Haoli Bai, Lifeng Shang, and Lingpeng Kong†.

Affiliations: The University of Hong Kong, Huawei Technologies

(*Equal contribution. †Corresponding author.)

Introducing Dream-VL and Dream-VLA

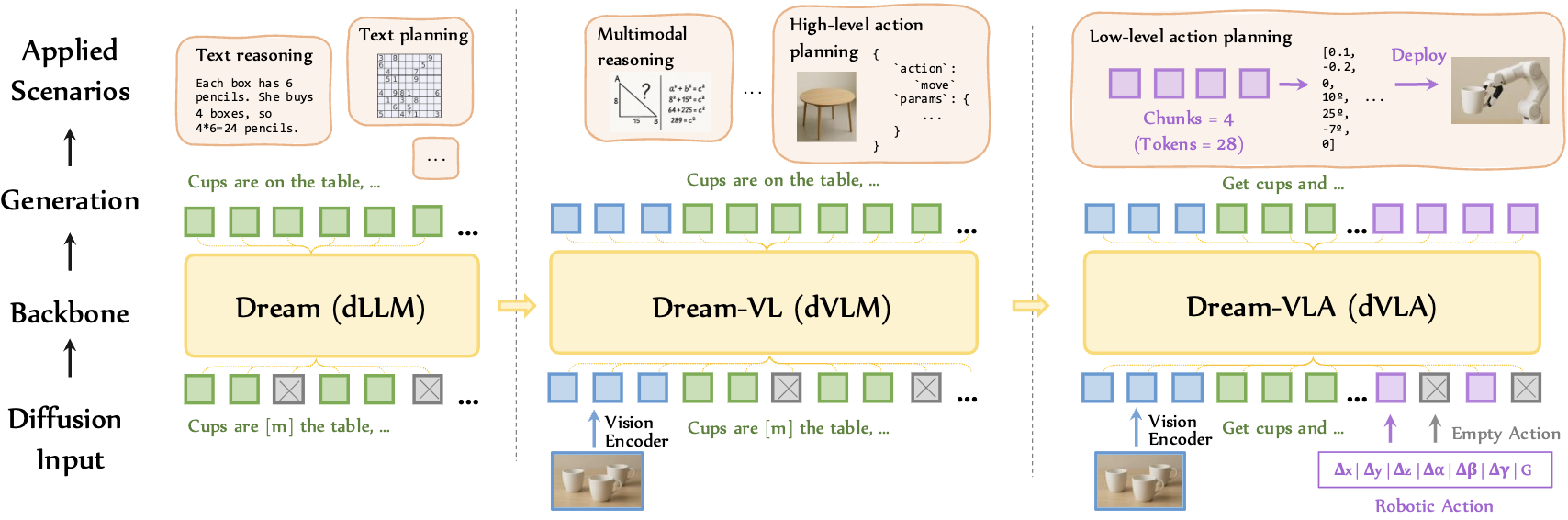

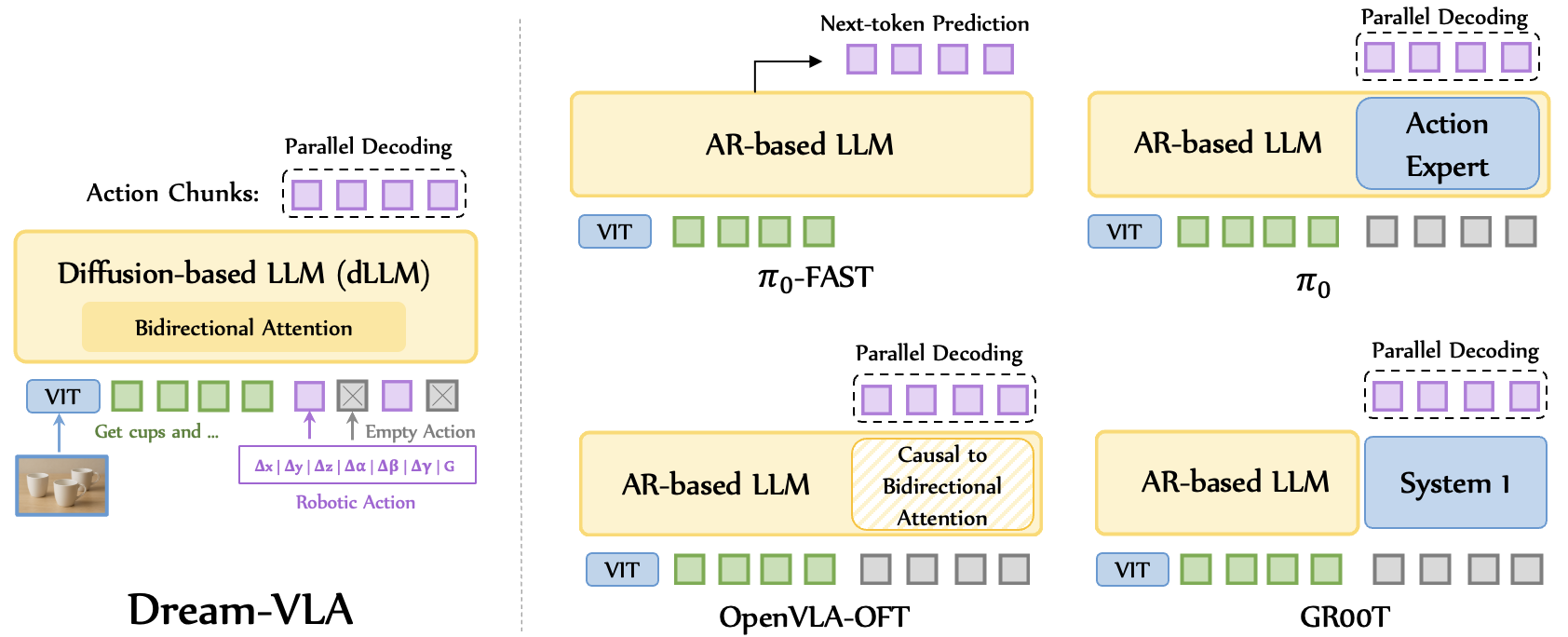

Building on the success of Dream 7B, we introduce Dream-VL and Dream-VLA, open VL and VLA models that fully unlock discrete diffusion’s advantages in long-horizon planning, bidirectional reasoning, and parallel action generation for multimodal tasks.

Key Results:

- Dream-VL: Achieves state-of-the-art performance among diffusion VLMs, comparable to top-tier AR VLMs trained on open data, with superior performance on visual planning tasks requiring long-horizon reasoning.

- Dream-VLA: Establishes top-tier performance with 97.2% average on LIBERO, 71.4% on SimplerEnv–Bridge, and 60.5% on SimplerEnv–Fractal, surpassing leading models including $\pi_0$, GR00T-N1, and OpenVLA-OFT. Consistently outperforms AR baselines across diverse finetuning objectives.

To facilitate further research in the community, we release the training code and models at:

- Dream-VL model: Dream-org/Dream-VL-7B

- Dream-VLA model: Dream-org/Dream-VLA-7B

- Codebase: GitHub

Why dLLM for Vision-language models?

Vision-language models are reshaping AI across diverse domains – from medical diagnosis and scientific discovery to autonomous systems and robotic manipulation.

Large vision-language models (VLMs) have demonstrated remarkable progress in grounding language with visual representations, powering applications from multimodal dialogue to scene understanding. Building on these foundations, vision-language-action (VLA) models extend these capabilities to action generation, enabling agents to interact with physical and virtual environments. However, current VL and VLA models overwhelmingly rely on autoregressive large language models (LLMs) as their backbone. This architectural choice presents a fundamental bottleneck: the autoregressive paradigm, trained on next-token prediction, struggles with tasks requiring long-horizon planning and global reasoning.

Diffusion large language models (dLLMs) offer a compelling alternative to address these challenges.

In summary, there could be at least three potential advantages of diffusion VLMs over autoregressive VLMs:

- Bidirectional attention enables richer information fusion between visual and text features;

- Text planning capability in dLLMs enhances visual planning in VLMs by generating global plans aligned with predefined goals;

- Native parallel generation supports action chunking without architectural modification, which is crucial for efficient and effective low-level action prediction in VLA models.
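To make the first point concrete, here is a minimal sketch contrasting a causal attention mask with a fully bidirectional one. The token layout (three image-patch tokens followed by two text tokens) and the helper names are hypothetical, chosen only for illustration; this is not the Dream-VL implementation.

```python
def causal_mask(n):
    """mask[i][j] is True when position i may attend to position j (only j <= i)."""
    return [[j <= i for j in range(n)] for i in range(n)]


def bidirectional_mask(n):
    """Every position may attend to every other position."""
    return [[True] * n for _ in range(n)]


# Hypothetical sequence: 3 image-patch tokens followed by 2 text tokens.
tokens = ["img0", "img1", "img2", "txt0", "txt1"]
causal = causal_mask(len(tokens))
full = bidirectional_mask(len(tokens))


def visible(mask, query):
    """Return the tokens that `query` is allowed to attend to under `mask`."""
    i = tokens.index(query)
    return [t for j, t in enumerate(tokens) if mask[i][j]]


# Under a causal mask, image tokens never see the text that follows them.
print("causal, img0 sees:", visible(causal, "img0"))
# Under a bidirectional mask, image and text tokens see each other in both directions.
print("bidirectional, img0 sees:", visible(full, "img0"))
```

In the causal case the visual tokens are encoded without access to the question, whereas the bidirectional mask lets visual and text features condition on each other.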

Dream-VL

Built on Dream 7B

Next, we are particularly interested in its potential on visual planning tasks. We consider two types of visual planning based on action granularity: high-level action planning that operates in a symbolic or semantic action space, and low-level action planning that focuses on precise control of robot behavior.

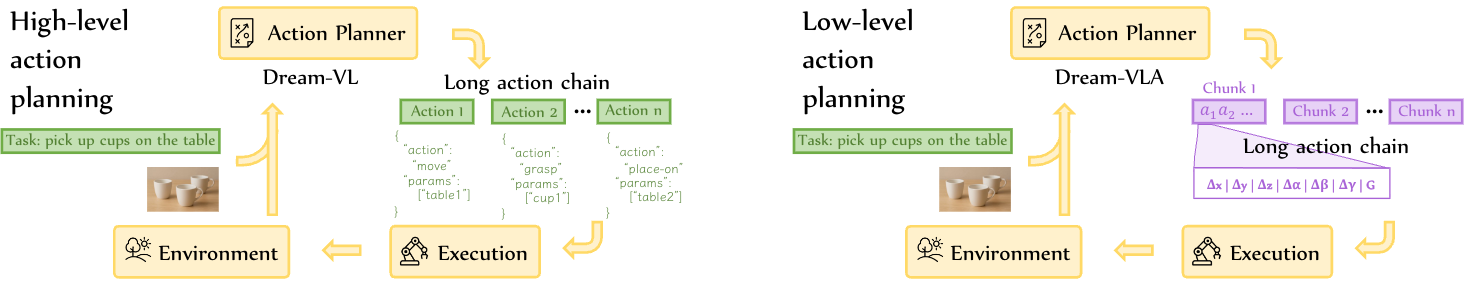

For high-level action planning, we consider ViPlan, which includes a block-manipulation domain described with symbolic predicates (e.g., on, clear, inColumn) and Household, featuring everyday objects in complex spatial scenarios. Each domain includes three difficulty tiers (simple, medium, hard), varying in scene complexity, required plan length, and subtlety of visual cues. Beyond grounding relations in the image, the model is also required to produce structured, symbolic actions that are similar to function or tool calls. Thus, ViPlan evaluates a hybrid capability that blends vision-text understanding and grounding with precise symbolic planning. An example of the grounding and planning tasks is shown below.

Grounding mode

<system>

Answer the question about the given image with "Yes" or "No" only.

</system>

<user>

{image}

Question: Is the cabinet_1 in reach of the agent?

Answer:

"No"

Planning mode

<system>

You are an expert planning assistant. You will be given an image which represents the current state of the environment you are in, a natural language description of the goal that needs to be achieved and a set of actions that can be performed in the environment.

Your task is to generate a plan that achieves the goal, in the form of a sequence of actions that need to be executed to reach the goal.

The format of your output should be a JSON object with the following structure:

```json
{
  "plan": [
    {
      "action": action_name,
      "parameters": {
        parameter_name: parameter_value
      }
    },
    ... other actions ...
  ]
}
```

You will also receive feedback of the previously taken actions, with a note showing if they failed or not. If an action failed, think about why that could be and then output a new plan accordingly.

</system>

<user>

## Description of the environment

The environment is about colored blocks and how they relate to each other. In the environment, the blocks will be arranged in columns, spanning from left to right. Keep in mind that some of these columns can be empty with no blocks currently placed in them. Within a column one or multiple blocks of different colors can be stacked on top of each other. Your task is to correctly evaluate the question based on the image provided.

## Available actions

You have only one action available, called `moveblock(block, column)`. This action allows you to move a block from its current column to the specified column. In order to perform this action, the block you want to move must be the topmost block of its column and must not already be in the target column. If the action is valid, the block will be moved to the specified column and will be placed on top of any blocks that are already in that column, if any.

To refer to the blocks, use lowercase letters for the colors: 'r' for red, 'g' for green, 'b' for blue, 'y' for yellow, 'p' for purple, 'o' for orange. To refer to the columns, use the labels provided in the image, 'c1', 'c2', 'c3', 'c4' and 'c5'.

## Goal

The yellow block needs to be in the column with label 'c2'.

The purple block needs to be on top of the yellow block and needs to be in the column with label 'c2'.

The red block needs to be on top of the purple block, needs to be the topmost block in its column and needs to be in the column with label 'c2'.

## Previously taken actions

[{"action": "moveblock", "parameters": {"block": "y", "column": "c2"}, "outcome": "success", "info": "success"}]

## Current environment state

{image}

</user>

{

"plan": [

{

"action": "moveblock",

"parameters": {"block": "p", "column": "c2"}

},

{

"action": "moveblock",

"parameters": {"block": "r", "column": "c2"}

}

]

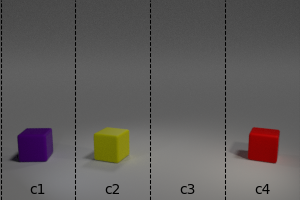

}We evaluate Dream-VL on the ViPlan benchmark in a zero-shot manner, comparing it against both autoregressive and diffusion-based vision-language models.
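The planning-mode example above can be sanity-checked with a small simulator of the `moveblock(block, column)` rule from the prompt. This is a minimal sketch, not the ViPlan evaluation code: the function names are hypothetical, and the starting state is an assumption, since the real state is only given by the image.

```python
import json


def apply_moveblock(columns, block, column):
    """Apply moveblock(block, column) in place; raise ValueError if the move is invalid."""
    if column not in columns:
        raise ValueError(f"unknown column {column!r}")
    source = next((name for name, stack in columns.items() if block in stack), None)
    if source is None:
        raise ValueError(f"unknown block {block!r}")
    if columns[source][-1] != block:
        raise ValueError(f"{block!r} is not the topmost block of {source}")
    if source == column:
        raise ValueError(f"{block!r} is already in {column}")
    columns[column].append(columns[source].pop())


def run_plan(columns, plan_json):
    """Parse a JSON plan in the prompt's output format and execute it step by step."""
    for step in json.loads(plan_json)["plan"]:
        if step["action"] != "moveblock":
            raise ValueError(f"unsupported action {step['action']!r}")
        apply_moveblock(columns, **step["parameters"])
    return columns


# Assumed state after the earlier move of 'y' to 'c2'; stacks are listed bottom to top.
state = {"c1": ["r"], "c2": ["y"], "c3": ["p"], "c4": [], "c5": []}

model_output = """
{"plan": [
  {"action": "moveblock", "parameters": {"block": "p", "column": "c2"}},
  {"action": "moveblock", "parameters": {"block": "r", "column": "c2"}}
]}
"""

final = run_plan(state, model_output)
print(final["c2"])  # goal: yellow at the bottom, then purple, then red on top
```

Under this assumed starting state, the two-step plan leaves column `c2` holding yellow, purple, and red from bottom to top, which matches the stated goal.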

We find that Dream-VL achieves better performance than another diffusion-based VLM, LLaDA-V, across all evaluation modes, which can be attributed to the training strategy and differences in the base model. Under controlled comparison, Dream-VL outperforms autoregressive baselines (i.e., MAmmoTH-VL-7B) in most scenarios, highlighting the potential architectural advantages of diffusion-based modeling for grounding and high-level planning.

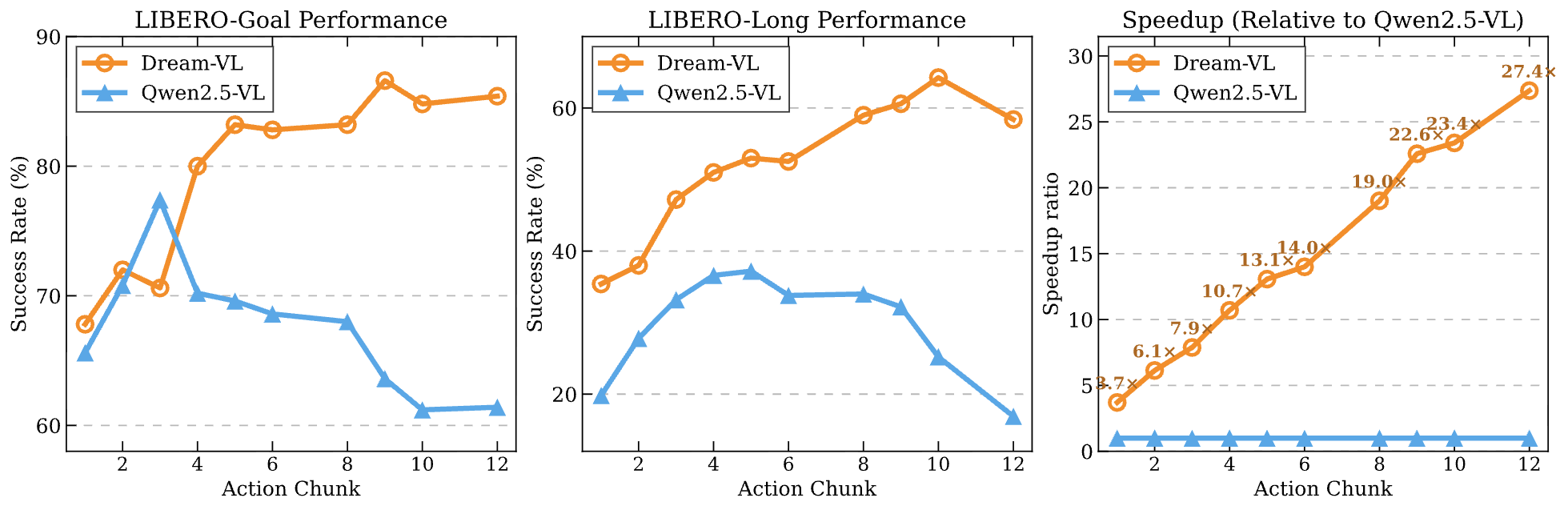

Besides high-level planning, we also conduct a preliminary study on low-level action planning, which focuses on generating fine-grained action sequences for robotic manipulation. Due to the large discrepancy in data distribution between robotic action data and the multimodal data used to train Dream-VL, we finetune both Qwen2.5-VL and Dream-VL on the LIBERO training set with autoregressive loss and discrete diffusion loss, respectively.

Interestingly, although Qwen2.5-VL generally exhibits stronger performance on standard vision-language benchmarks, as shown previously, its performance in low-level action planning degrades substantially with larger chunk sizes due to error accumulation, while Dream-VL remains robust over longer horizons. Moreover, low-level action tokens have relatively low information density (e.g., many similar adjacent actions) and weak ordering constraints (e.g., among translation, rotation, and gripper), which makes them particularly well-suited for parallel decoding. In this setting, Dream-VL is highly efficient: it requires only a single diffusion step to achieve competitive performance, delivering a 27× speedup over autoregressive generation.
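The efficiency argument can be illustrated by counting model calls for an action chunk. The sketch below uses a toy stand-in policy, and its numbers are assumptions: 7 tokens per action (3 translation, 3 rotation, 1 gripper) and a chunk of 8 actions. It shows only why single-step parallel decoding needs far fewer forward passes. It does not reproduce the measured 27× wall-clock speedup, and the left-to-right unmasking order is a simplification.

```python
MASK = None
ACTION_DIMS = 7   # assumption: 3 translation + 3 rotation + 1 gripper token per action
CHUNK_SIZE = 8    # assumption: number of actions predicted per chunk


class ToyPolicy:
    """Stand-in for a model: returns a prediction for every position in one call."""

    def __init__(self):
        self.calls = 0

    def __call__(self, tokens):
        self.calls += 1
        # Dummy prediction: each position's token is simply its index.
        return list(range(len(tokens)))


def decode_autoregressive(policy, length):
    """Generate tokens one at a time: one forward pass per new token."""
    tokens = []
    for _ in range(length):
        preds = policy(tokens + [MASK])
        tokens.append(preds[-1])
    return tokens


def decode_parallel(policy, length, steps=1):
    """Start fully masked and fill in a share of the masked positions at each step."""
    tokens = [MASK] * length
    per_step = -(-length // steps)  # ceiling division
    for _ in range(steps):
        preds = policy(tokens)
        masked = [i for i, t in enumerate(tokens) if t is MASK]
        for i in masked[:per_step]:
            tokens[i] = preds[i]
    return tokens


length = CHUNK_SIZE * ACTION_DIMS
ar_policy, par_policy = ToyPolicy(), ToyPolicy()
ar_tokens = decode_autoregressive(ar_policy, length)
par_tokens = decode_parallel(par_policy, length, steps=1)

print("autoregressive forward passes:", ar_policy.calls)
print("single-step parallel forward passes:", par_policy.calls)
```

Because autoregressive decoding feeds each prediction back as input, an early mistake can propagate through the rest of the chunk, which is consistent with the error accumulation described above.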

Dream-VLA

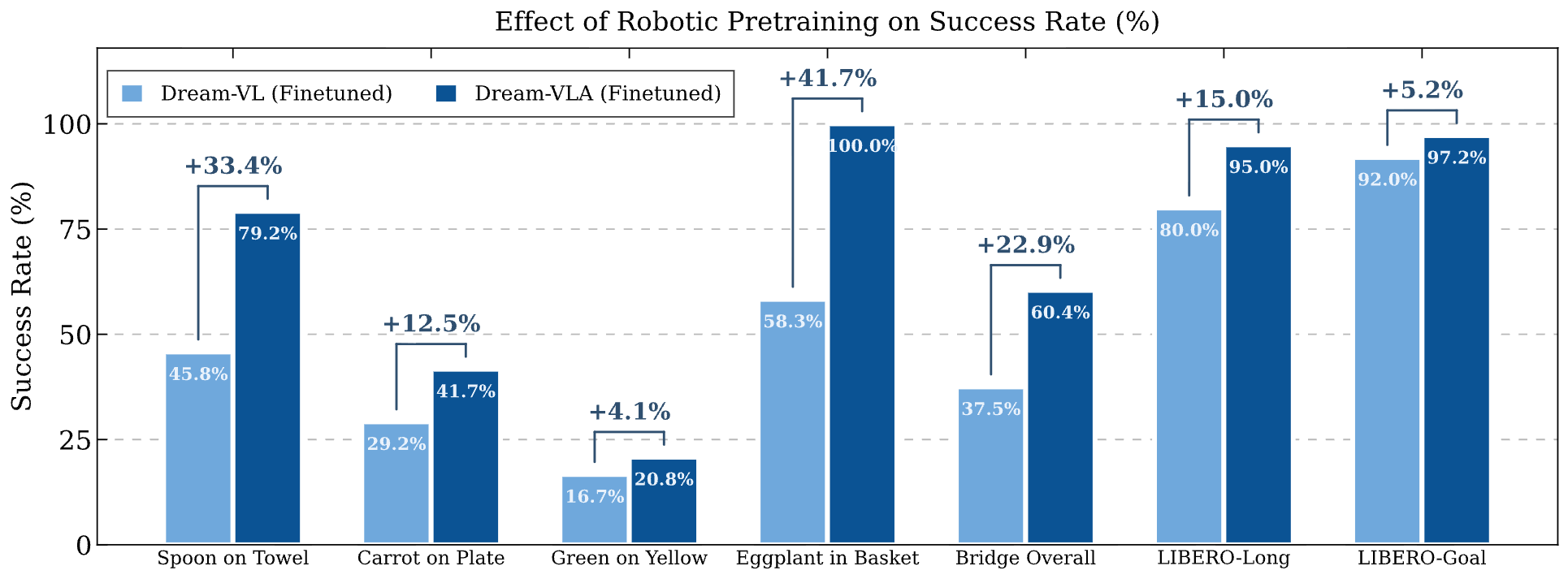

Given the potential of Dream-VL in low-level action planning, we further conduct large-scale robotic pretraining on Dream-VL to obtain a general vision-language-action (VLA) model. Following OpenVLA

What can we get from Dream-VLA?

For AR-based VLA backbones, robotic pretraining or finetuning often requires structural modifications (e.g., attention mask adjustments in OpenVLA-OFT or adding action expert in $\pi_0$) to enable action chunking, which is critical as it enhances both inference efficiency and task success rates. One advantage of Dream-VLA, as a diffusion-based backbone, is that it inherently supports parallel decoding and action chunking without requiring architectural changes. In fact, the model architecture remains consistent starting from the LLM stage, thereby minimizing performance loss caused by structural variations across stages.

Below is the performance comparison between these VLA models on the standard LIBERO and SimplerEnv benchmarks.

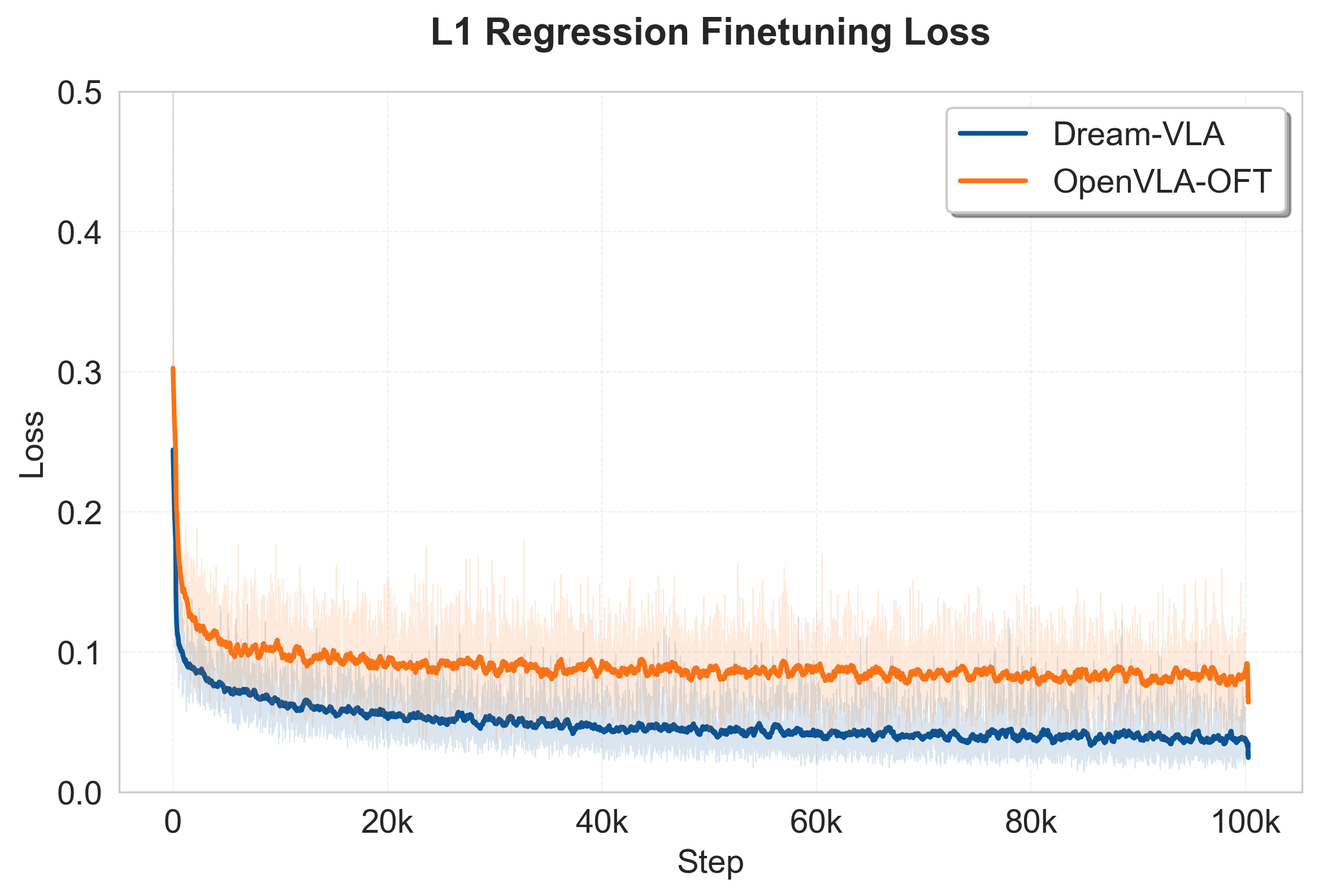

Even though we use a discrete diffusion loss for robotic pretraining, we find that any loss can be chosen during downstream SFT. In fact, continuous losses (e.g., L1 regression or flow matching) can usually outperform discrete losses during finetuning because the original actions are continuous in nature. Across various finetuning objectives, we find that Dream-VLA achieves superior downstream performance compared to OpenVLA-OFT.
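One way to see why continuous objectives suit continuous actions is to compare an L1 regression loss with a cross-entropy loss over discretized action bins. The sketch below is illustrative only: the 256 uniform bins, the normalized range [-1, 1], and the example action values are assumptions, not the settings used for Dream-VLA.

```python
import math

NUM_BINS = 256          # assumption: number of discrete action bins
LOW, HIGH = -1.0, 1.0   # assumption: actions are normalized to [-1, 1]


def to_bin(value):
    """Map a continuous action value to a uniform bin index."""
    clipped = min(max(value, LOW), HIGH)
    return min(int((clipped - LOW) / (HIGH - LOW) * NUM_BINS), NUM_BINS - 1)


def from_bin(index):
    """Map a bin index back to the center of that bin."""
    width = (HIGH - LOW) / NUM_BINS
    return LOW + (index + 0.5) * width


def l1_loss(pred, target):
    """Mean absolute error between predicted and target continuous actions."""
    return sum(abs(p - t) for p, t in zip(pred, target)) / len(target)


def cross_entropy(logits, target_bin):
    """Negative log-likelihood of the target bin under softmax(logits)."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(x - m) for x in logits))
    return log_z - logits[target_bin]


target = [0.1234, -0.5, 0.9]              # hypothetical continuous action values
bins = [to_bin(a) for a in target]        # discrete targets for a token-level loss
recon = [from_bin(b) for b in bins]       # best possible discrete reconstruction

# Even a perfect discrete prediction keeps a quantization error of up to half a bin.
print("quantization L1 error:", l1_loss(recon, target))
# A perfect continuous prediction has exactly zero L1 error.
print("continuous L1 error:", l1_loss(target, target))
# Cross-entropy treats bins as unordered classes; uniform logits give log(NUM_BINS).
print("uniform cross-entropy:", cross_entropy([0.0] * NUM_BINS, bins[0]))
```

The discrete loss also ignores how close a wrong bin is to the correct one, while L1 regression penalizes errors in proportion to their size.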

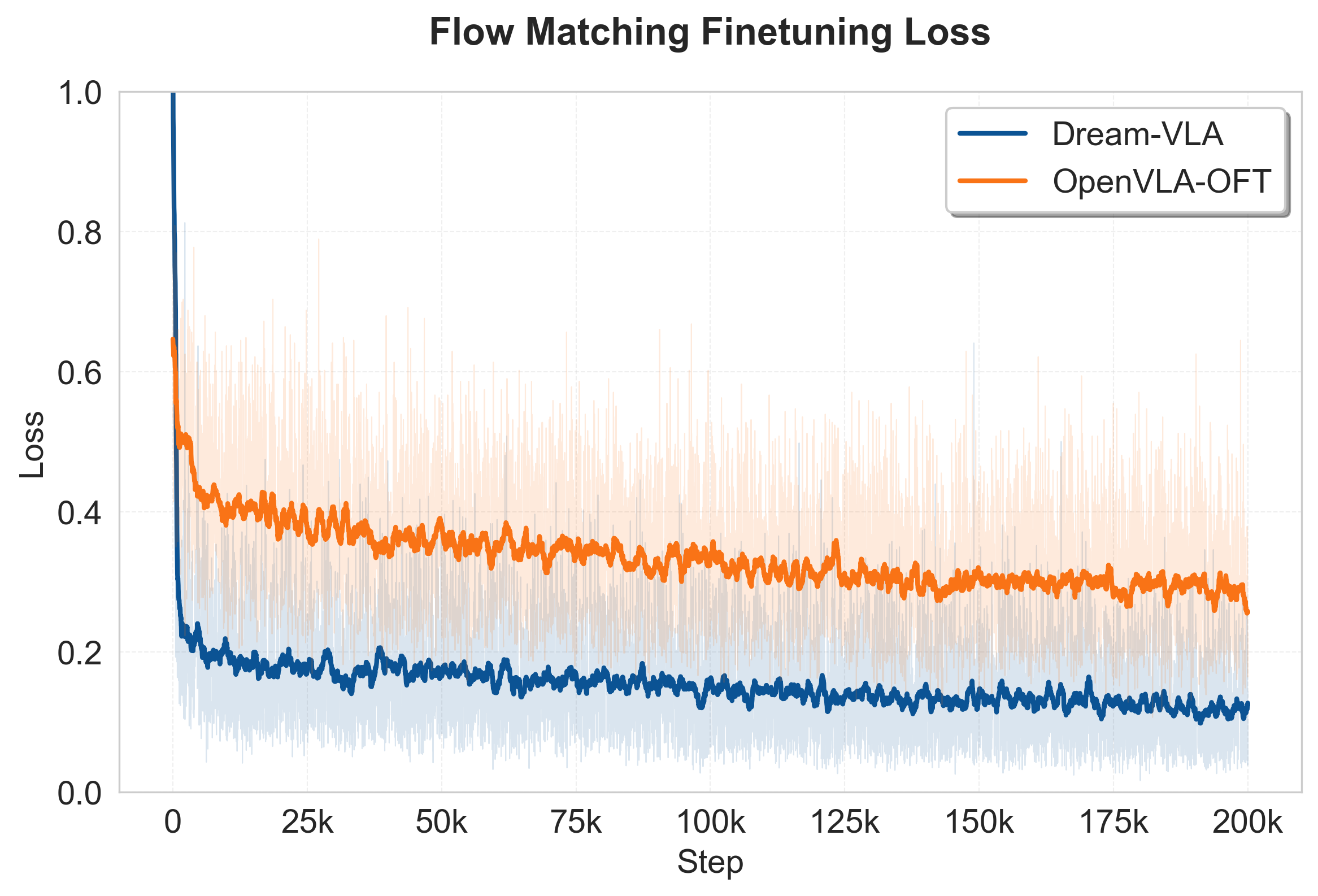

Besides, due to the absence of architectural modifications, we find that the model tends to converge faster and achieve a lower loss in finetuning compared to OpenVLA-OFT, especially when continuous diffusion or flow matching is used.

Below are some demos in both simulated and real-world environments.

LIBERO Franka Robot

Real World PiPER Robot

Conclusion

We introduce Dream-VL and Dream-VLA as a preliminary exploration of diffusion LLMs for vision-language and vision-language-action tasks. Dream-VL achieves state-of-the-art performance among diffusion VLMs and demonstrates superior capabilities on visual planning tasks. Dream-VLA establishes top-tier performance on robotic manipulation benchmarks, consistently outperforming AR baselines across diverse finetuning objectives. However, there is still substantial room for improvement, and we release both models and code to facilitate further research in diffusion-based multimodal models.

Citation

@article{ye2025dreamvla,
  title={Dream-VL & Dream-VLA: Open Vision-Language and Vision-Language-Action Models with Diffusion Language Model Backbone},
  author={Ye, Jiacheng and Gong, Shansan and Gao, Jiahui and Fan, Junming and Wu, Shuang and Bi, Wei and Bai, Haoli and Shang, Lifeng and Kong, Lingpeng},
  journal={arXiv preprint},
  year={2025}
}