DreamOn: Diffusion Language Models For Code Infilling Beyond Fixed-Size Canvas

A simple yet effective approach to unlock variable-length generation for diffusion language models.

Team: Zirui Wu*, Lin Zheng*, Zhihui Xie, Jiacheng Ye, Jiahui Gao, Yansong Feng, Zhenguo Li, Victoria W., Guorui Zhou, Lingpeng Kong

*: Equal Contribution

Affiliations: The University of Hong Kong, Kuaishou Technology, Huawei Noah’s Ark Lab, Peking University

Introducing DreamOn

In this post, we introduce a simple yet effective training method to unleash the full potential of diffusion language models for variable-length generation. Built upon existing masked diffusion models, our approach features:

- Flexible generation from any-length sequences

- Simple and practical implementation with two special sentinel tokens for length control

- Easy finetuning on existing masked diffusion models

- Infilling performance that catches up with oracle-length performance

Effective Variable-length Generation on Infilling

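Although Diffusion Language Models (DLMs) have recently gained significant attention, they generate on a fixed-size canvas: the number of masked tokens, and hence the output length, must be set before decoding begins.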

This limitation makes it challenging for DLMs to tackle flexible generation in real-world applications such as infilling, because the length of the generated content must be specified a priori. To illustrate, we evaluate our Dream-Coder-7B on code infilling tasks, where the model is asked to fill in the missing span given prefix and suffix context. When the given mask length does not match the length of the canonical solution, the model struggles to infill the code, and pass@1 drops by 35.5% compared with oracle-length performance.

In this work, we present DreamOn (Diffusion Reasoning Model with Length Control), a novel discrete diffusion algorithm designed to address the variable-length generation challenge in code infilling. Our approach enables dynamic expansion and contraction of mask tokens during inference, providing flexible length control without requiring predetermined canvas sizes.

We believe that enabling variable-length sequence generation opens new avenues for DLMs, unlocking their potential for more sophisticated applications including adaptive prompting, flexible infilling, and seamless editing workflows, particularly in programming contexts where content length is inherently unpredictable.

DreamOn: Masked Diffusion with Augmented States

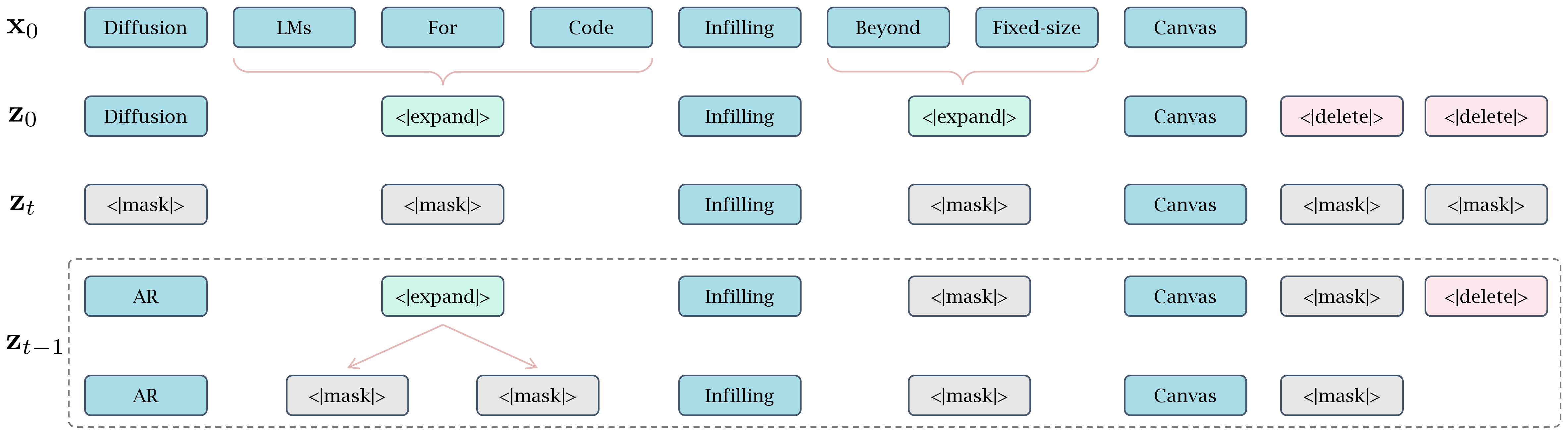

DreamOn extends standard masked diffusion models with two special states, <|expand|> and <|delete|>, to enable precise length control. We define them so that, in the forward diffusion process, tokens in either state always transition to <|mask|>; during the backward process, <|expand|> is deterministically expanded into two <|mask|> tokens at the same position, while <|delete|> is removed from the sequence. This design allows the model to dynamically adjust sequence length.
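The forward rule described above can be sketched in a few lines. This is a minimal illustration, not the authors' code: the function name `forward_noise`, the per-token independent masking with probability `t`, and the use of string tokens are all assumptions made for readability.

```python
import random

MASK, EXPAND, DELETE = "<|mask|>", "<|expand|>", "<|delete|>"

def forward_noise(z0, t, rng):
    """Noise an augmented sequence z0 at noise level t in [0, 1].

    Assumption: regular tokens are masked independently with probability t.
    The special states <|expand|> and <|delete|> always become <|mask|>.
    """
    noised = []
    for tok in z0:
        if tok in (EXPAND, DELETE) or rng.random() < t:
            noised.append(MASK)
        else:
            noised.append(tok)
    return noised

z0 = ["def", EXPAND, "x", DELETE, "return"]
# At t = 0 no regular token is masked, but both special states still are.
print(forward_noise(z0, t=0.0, rng=random.Random(0)))
```

Because the special states never survive the forward process, the model only ever sees them as prediction targets, never as inputs.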

To train the model with these special states, we construct an auxiliary sequence $\mathbf{z}_0$ from each original sequence $\mathbf{x}_0$ by 1) randomly merging token spans in $\mathbf{x}_0$ into <|expand|>, and 2) inserting a random number of tokens with state <|delete|>. As illustrated in the diagram below, $\mathbf{z}_0$ typically differs in length from $\mathbf{x}_0$. We then train the masked diffusion model on $\mathbf{z}_0$ instead of diffusing over $\mathbf{x}_0$, and by doing so, the model learns to denoise not only regular tokens but also special states from <|mask|>, thus achieving effective variable-length generation.

Implementation

Similar to <|mask|> in masked diffusion models, we define the introduced states <|expand|> and <|delete|> as special sentinel tokens in the tokenizer vocabulary, and train the model to denoise them just as if they were regular tokens. This formulation is appealing because it is easy to implement: it requires no changes to the model architecture and supports straightforward fine-tuning from pretrained masked diffusion models.

Training

To construct $\mathbf{z}_0$, instead of merging and inserting tokens before masking (as demonstrated in the diagram above), we first apply a mask noising process over $\mathbf{x}_0$, followed by random merges of consecutive <|mask|> tokens to form <|expand|> and random insertion of <|delete|> tokens. This sampling scheme provides greater control over the noise level and the balance between length control and generation quality (see the Analysis section).
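One way to read the mask-first construction is sketched below. It is an interpretation under stated assumptions rather than the released training code: the function name `build_training_pair`, merging exactly two adjacent masked positions per <|expand|>, inserting <|delete|> positions uniformly at random, and all parameter names are hypothetical.

```python
import random

MASK, EXPAND, DELETE = "<|mask|>", "<|expand|>", "<|delete|>"

def build_training_pair(x0, mask_prob, p_merge, max_deletes, rng):
    """Return (inputs, targets) for one training example.

    Step 1: mask each token of x0 independently with probability mask_prob.
    Step 2: with probability p_merge, merge two adjacent masked positions
            into a single masked position whose target is <|expand|>.
    Step 3: insert between 0 and max_deletes extra masked positions whose
            target is <|delete|>.
    """
    masked = [rng.random() < mask_prob for _ in x0]
    inputs, targets = [], []
    i = 0
    while i < len(x0):
        can_merge = masked[i] and i + 1 < len(x0) and masked[i + 1]
        if can_merge and rng.random() < p_merge:
            inputs.append(MASK)
            targets.append(EXPAND)   # stands in for two original tokens
            i += 2
        elif masked[i]:
            inputs.append(MASK)
            targets.append(x0[i])
            i += 1
        else:
            inputs.append(x0[i])
            targets.append(x0[i])
            i += 1
    for _ in range(rng.randint(0, max_deletes)):
        pos = rng.randint(0, len(inputs))
        inputs.insert(pos, MASK)
        targets.insert(pos, DELETE)
    return inputs, targets

x0 = "def add ( a , b ) : return a + b".split()
inputs, targets = build_training_pair(x0, 0.6, 0.5, 3, random.Random(0))
print(inputs)
print(targets)
```

Under these assumptions, resolving the targets (two slots per <|expand|>, none per <|delete|>) always recovers the original length, which is the property that lets the model learn length adjustments from ordinary denoising supervision.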

We design two heuristic schedulers for constructing <|expand|> tokens. (1) The static scheduler merges adjacent <|mask|> tokens with a fixed merging probability $p_{merge}=0.5$. (2) The dynamic inverse scheduler merges adjacent <|mask|> tokens with a probability that is inversely proportional to the number of <|mask|> tokens in the sequence. We find that mixing the two schedulers leads to the best performance in practice.
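The two schedulers can be written as a small function that returns the merge probability. This is a sketch only: the post gives the static probability (0.5) but not the proportionality constant of the dynamic inverse scheduler or how the two are mixed, so `scale`, `mix`, and the per-sample coin flip below are assumptions.

```python
import random

def merge_probability(num_masks, scheduler, p_static=0.5, scale=1.0):
    """Probability of merging two adjacent <|mask|> tokens.

    static:          fixed probability p_static.
    dynamic_inverse: scale / num_masks, clipped to at most 1
                     (scale is a hypothetical constant).
    """
    if scheduler == "static":
        return p_static
    if scheduler == "dynamic_inverse":
        return min(1.0, scale / max(num_masks, 1))
    raise ValueError(f"unknown scheduler: {scheduler}")

def mixed_merge_probability(num_masks, rng, mix=0.5):
    """Assumed mixing rule: pick one scheduler at random per sample."""
    scheduler = "static" if rng.random() < mix else "dynamic_inverse"
    return merge_probability(num_masks, scheduler)

rng = random.Random(0)
for n in (2, 8, 32):
    print(n, merge_probability(n, "dynamic_inverse"), mixed_merge_probability(n, rng))
```

With the inverse rule, heavily masked sequences see few merges, so most targets remain regular tokens, while lightly masked sequences still get regular exposure to <|expand|>.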

We fine-tune Dream-Coder-7B on the education instruct subset of opc-sft-stage2 from OpenCoder. This subset contains 110k instruction-solution pairs synthesized from seed data of high educational value. For infilling tasks, we randomly split the solution into prefix, middle, and suffix. We treat the instruction, prefix, and suffix of the solution as fixed, and only diffuse over tokens in the middle. In this setting, we found that appending a random number of <|delete|> tokens at the end of the middle section suffices to learn effective sequence contraction. We downweight the loss of predicting <|delete|> tokens to avoid overfitting.
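The infilling data construction can be sketched as follows. The helper names `make_fim_example` and `weighted_loss`, the uniform choice of cut points, the `delete_weight` value, and the weight-normalized average are illustrative assumptions; the post does not specify them. The instruction is omitted here because it is held fixed and plays no role in the split.

```python
import random

DELETE = "<|delete|>"

def make_fim_example(solution, max_deletes, delete_weight, rng):
    """Split a tokenized solution and build targets for the middle span."""
    # Two sorted cut points split the solution into prefix / middle / suffix.
    a, b = sorted(rng.randint(0, len(solution)) for _ in range(2))
    prefix, middle, suffix = solution[:a], solution[a:b], solution[b:]
    # Append a random number of <|delete|> targets after the middle span.
    num_deletes = rng.randint(0, max_deletes)
    middle_targets = middle + [DELETE] * num_deletes
    # Per-token loss weights: <|delete|> targets are downweighted.
    weights = [1.0] * len(middle) + [delete_weight] * num_deletes
    return prefix, middle_targets, suffix, weights

def weighted_loss(per_token_losses, weights):
    """Weight-normalized average of per-token losses (assumed reduction)."""
    total = sum(weights)
    if total <= 0:
        return 0.0
    return sum(l * w for l, w in zip(per_token_losses, weights)) / total

solution = "def add ( a , b ) : return a + b".split()
prefix, middle_targets, suffix, weights = make_fim_example(
    solution, max_deletes=4, delete_weight=0.1, rng=random.Random(0)
)
print(prefix, middle_targets, suffix, weights, sep="\n")
```

Only the middle targets would be masked and diffused over; the prefix and suffix stay visible as conditioning context.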

Inference

During inference, our model shows little difference from the original masked diffusion denoising, except that at each iteration, when an <|expand|> token is predicted from <|mask|>, we expand it into two <|mask|> tokens in the same position; and when a <|delete|> token is predicted, we simply remove it from the sequence. This is a crude heuristic that greedily expands or contracts sequence length at each step; however, we found it performed effectively and robustly in infilling tasks.
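The inference rule above amounts to a small post-processing step inside the usual denoising loop. The sketch below assumes a hypothetical `predict(canvas)` callable that returns a `{position: token}` dict for the masked positions it commits at this step; a scripted toy predictor stands in for the real model so the loop can be run end to end.

```python
MASK, EXPAND, DELETE = "<|mask|>", "<|expand|>", "<|delete|>"

def denoise_with_length_control(canvas, predict, max_steps=64):
    """Iteratively fill masks, expanding or deleting positions as predicted."""
    canvas = list(canvas)
    for _ in range(max_steps):
        if MASK not in canvas:
            break
        updates = predict(canvas)
        next_canvas = []
        for i, tok in enumerate(canvas):
            new = updates.get(i, tok) if tok == MASK else tok
            if new == EXPAND:
                next_canvas.extend([MASK, MASK])  # one slot becomes two masks
            elif new == DELETE:
                continue                          # drop the slot entirely
            else:
                next_canvas.append(new)
        canvas = next_canvas
    return canvas

def make_toy_predictor(target_middle, prefix_len):
    """Scripted stand-in for a model: fills left to right toward a known target."""
    def predict(canvas):
        mask_positions = [i for i, t in enumerate(canvas) if t == MASK]
        first = mask_positions[0]
        filled = first - prefix_len               # middle tokens committed so far
        remaining = len(target_middle) - filled
        if remaining == 0:
            return {first: DELETE}                # canvas too long: contract
        if len(mask_positions) < remaining:
            return {first: EXPAND}                # canvas too short: expand
        return {first: target_middle[filled]}
    return predict

prefix, suffix, middle = ["a", "="], [";"], ["x", "+", "y"]
predict = make_toy_predictor(middle, len(prefix))
short = denoise_with_length_control(prefix + [MASK] + suffix, predict)
long_ = denoise_with_length_control(prefix + [MASK] * 5 + suffix, predict)
print(short)  # ['a', '=', 'x', '+', 'y', ';']
print(long_)  # ['a', '=', 'x', '+', 'y', ';']
```

Both a one-mask and a five-mask canvas converge to the same three-token infill, which is the behavior the length-control tokens are meant to provide. A real model would predict over all masked positions and choose which to commit, for example by confidence.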

Evaluation

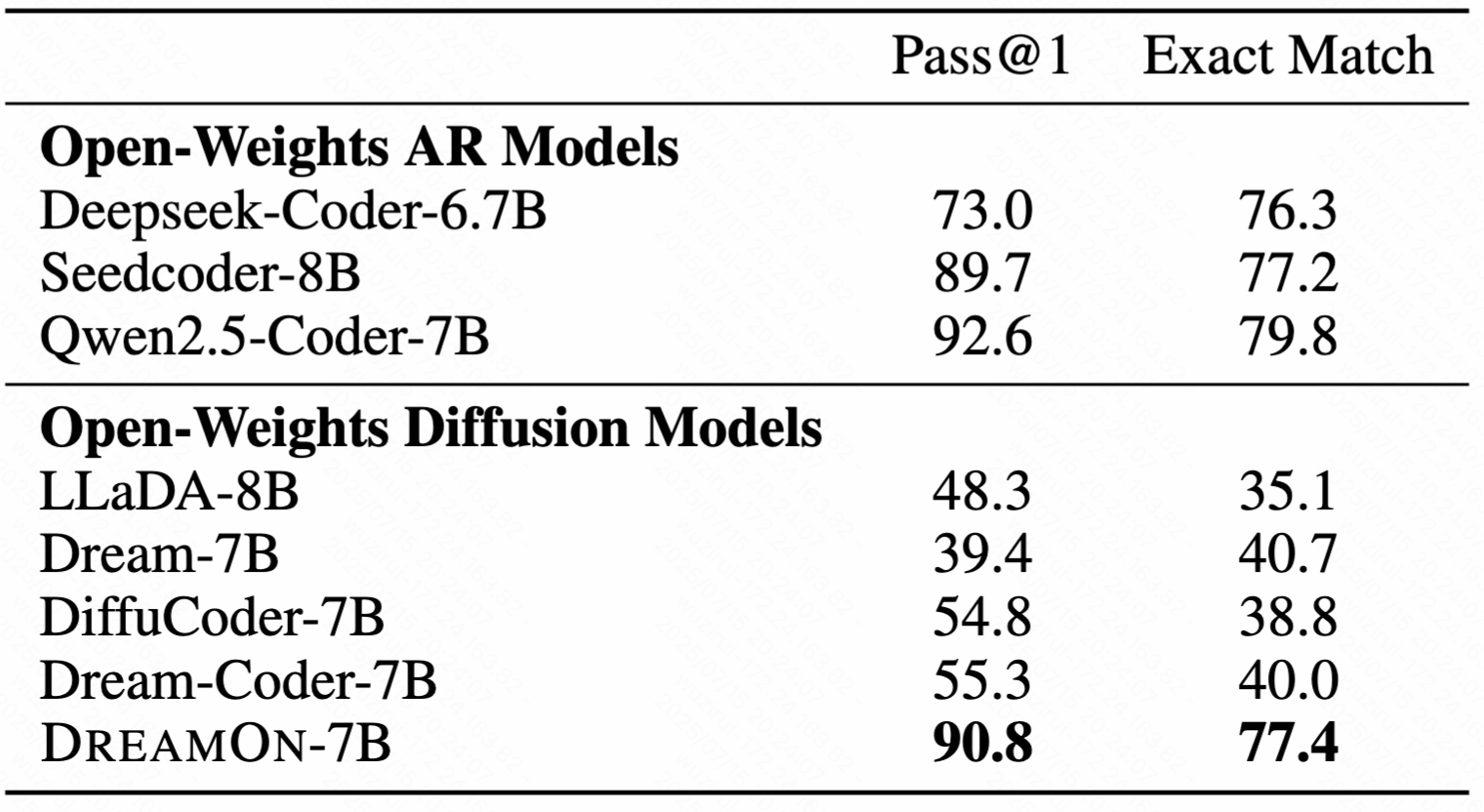

We evaluate our model on HumanEval-Infilling and the Python subset of SantaCoder-FIM. We report pass@1 for HumanEval-Infilling and exact match for SantaCoder-FIM, following the official evaluation scripts. We evaluate our model with different initial mask lengths to assess how well its length control generalizes. We also evaluate performance under oracle length, with expansion and deletion disabled, to measure infilling capability on a fixed-size canvas.

Our results demonstrate that DreamOn achieves significant improvements over other diffusion models that lack variable-length generation capabilities in code infilling, approaching the performance of leading open-source autoregressive language models that are trained with infilling objectives.

Analysis

Performance Breakdown

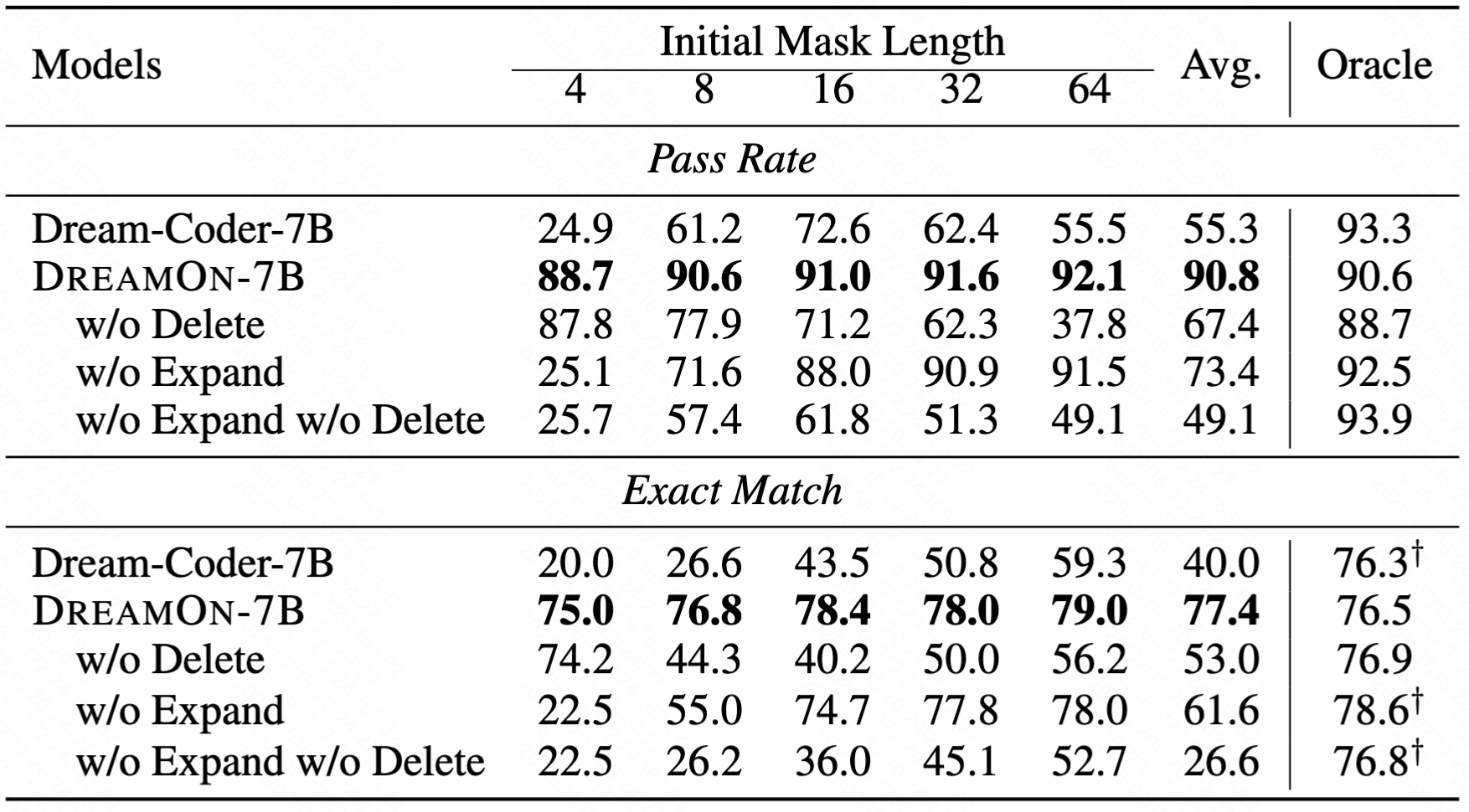

We perform ablation studies on our expansion and contraction design to show the effectiveness of our approach. Performance with different masked token lengths shows that DLMs trained on infilling without sentinel tokens exhibit poor performance, particularly on short sequences, while those incorporating both expansion and deletion achieve the highest pass@1 across all lengths. The combination of both mechanisms leads to a substantial improvement in pass rate (90.8% average) and exact match accuracy (73.9% average), approaching oracle performance.

Exact match scores show that our approach can reproduce the ground truth token for token across infilling spans of different lengths. Our approach offers a text diffusion process that is no longer limited to fixed-length sequences and can adapt to variable-length generation tasks at scale, such as infilling.

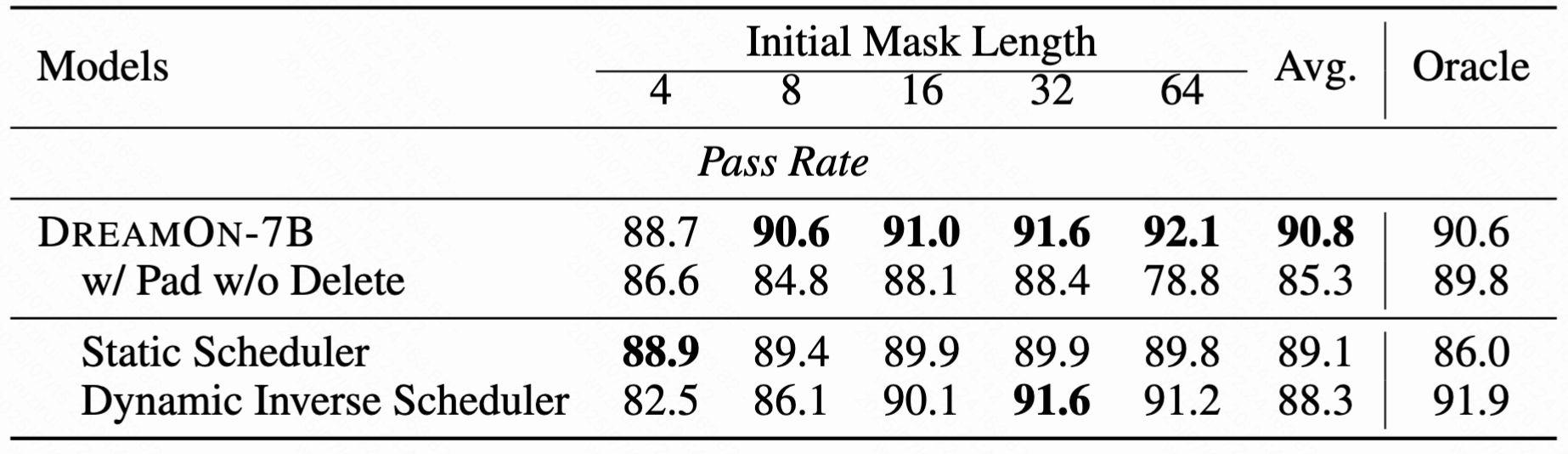

Padding vs. Deletion

Current DLMs often use <|pad|> tokens to fill the end of the sequence, which can be viewed as a special form of length cutoff control. The predicted <|pad|> tokens are kept in the sequence as input for the next denoising step. To compare, we experiment with training our model while keeping predicted <|delete|> tokens in the sequence instead of removing them. The presence of <|delete|> tokens introduces potential distraction into the denoising process, which could disrupt attention patterns or token-position alignments, especially when deletions are frequent or irregular. Our design, which removes <|delete|> tokens, offers greater flexibility and effectiveness for dynamic length control.

Choice of Merging Scheduler

Balancing the degree of length control against the quality of generation is crucial for robust variable-length generation. We train our model with the static and the dynamic inverse scheduler separately to study their effect on generalizability.

The static scheduler offers a higher degree of length control, achieving the highest pass rate when the mask length is extremely short. However, it leads to lower-quality generation than the other schedulers: a large number of regular tokens are replaced with <|expand|> tokens during training, which harms language modeling ability. The dynamic inverse scheduler addresses this problem by downweighting merged <|expand|> tokens, but it expands poorly when the mask length is too short. Therefore, we mix the two schedulers to balance length control and generation quality.

Conclusion

It remains challenging for non-autoregressive generative models to generate variable-length sequences. Prior research has explored several strategies to address this, such as learning a separate length prediction module.

In contrast, our approach introduces only two special tokens into the tokenizer vocabulary and requires no changes to the model architecture or the loss objective. This leads to a simple and scalable implementation that remains effective on infilling tasks. Notably, DreamOn achieves code infilling performance comparable to that obtained with oracle length, highlighting its capability to handle variable-length generation.

This blog post presents our preliminary results on variable-length generation with DLMs. Future work will explore extensions beyond fill-in-the-middle (FIM) tasks and further improve training and inference strategies.

Citation

@misc{Dreamon2025,
  title = {DreamOn: Diffusion Language Models For Code Infilling Beyond Fixed-size Canvas},
  url = {https://hkunlp.github.io/blog/2025/dreamon},
  author = {Wu, Zirui and Zheng, Lin and Xie, Zhihui and Ye, Jiacheng and Gao, Jiahui and Feng, Yansong and Li, Zhenguo and W., Victoria and Zhou, Guorui and Kong, Lingpeng},
  year = {2025}
}